AI has undeniably arrived, and it already has a firm foot in the door of the world. ChatGPT has 300 million weekly users worldwide, and there are no signs of it slowing down anytime soon. However, studies of its environmental impact suggest it is a taxing commodity.

Whether in school labs or tucked away in basements, many of us recall old, bulky desktop computers. These towers, along with technology such as gaming consoles and cable boxes, heat up after prolonged use. This occurs as they convert electrical energy into the processing power required for games and browsing, generating heat in the process.

As technology became more wireless and lightweight, those hulking boxes didn't simply disappear; they moved. Processing capacity has to live somewhere, and although the internet is often thought of as floating in “the cloud,” this couldn't be further from the truth.

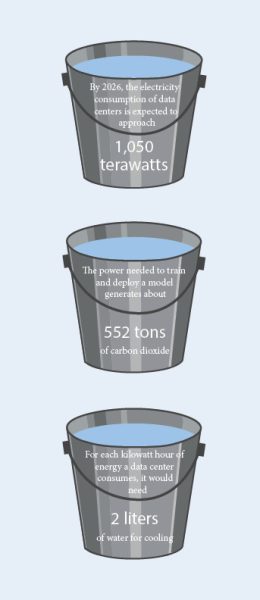

In reality, that processing power now lives in vast networks of computers housed in data centers that together cover millions of square feet around the globe. ABI Research predicts that there will be over 6,000 public data centers worldwide by the end of 2025 and over 8,300 in operation by 2030.

The computers in these data centers inevitably get hot, and many facilities rely on water-based cooling systems to keep the hardware from overheating. This is no secret and has been standard practice for quite some time.

Here is the issue: by some estimates, a single ChatGPT query requires roughly 1500% more energy than a single Google search, depending on the size of the request. More energy means more heat, and more heat means more water is needed to cool the systems running these models. AI workloads make data center hardware run hotter. Much hotter.

Microsoft, whose Azure data centers run ChatGPT, said its annual global water consumption jumped to nearly 1.7 billion gallons, mainly due to its AI research. Ashish Kapoor, the Senior Policy Analyst for the Piedmont Environmental Council, stated that “One ChatGPT request is equivalent to pouring out a bottle of water or powering a light bulb for 15 minutes.”

According to the Washington Post, simply asking AI to draft a 100-word email requires 519 milliliters of water. Extrapolate this to ChatGPT's 123.5 million daily users, and a staggering 17 million gallons of water would be used every day for that task alone. And most users enter more than one query and ask for things far more demanding than a brief email.
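The 17-million-gallon figure comes from simple multiplication. The short Python sketch below reproduces it, assuming exactly one 100-word email per daily user and the U.S. liquid gallon of 3.785411784 liters; both assumptions are ours, not the Post's, and the function name is purely illustrative.

```python
# Rough check of the email-water extrapolation.
# Assumptions (not from the Post): one 100-word email per daily user,
# and the U.S. liquid gallon (3.785411784 liters).
ML_PER_EMAIL = 519               # milliliters per 100-word email (Washington Post figure)
DAILY_USERS = 123_500_000        # ChatGPT daily users cited above
LITERS_PER_GALLON = 3.785411784  # U.S. liquid gallon


def daily_gallons(ml_per_query: float, users: int) -> float:
    """Return total gallons per day if each user makes exactly one query."""
    total_liters = ml_per_query * users / 1000  # milliliters -> liters
    return total_liters / LITERS_PER_GALLON     # liters -> gallons


if __name__ == "__main__":
    gallons = daily_gallons(ML_PER_EMAIL, DAILY_USERS)
    print(f"{gallons / 1e6:.1f} million gallons per day")
```

This prints about 16.9 million gallons per day, which rounds to the 17 million cited. Because it counts only one email per user, it should be read as a floor rather than a ceiling.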

The demand for water has sounded alarms over sustainability, especially in regions that already experience water scarcity. These data centers mostly source their water from municipal or regional water utilities. While some of this water can be recycled, local water sources still come under heavy strain because of the sheer amount of energy AI models require.

The largest concern may be that AI and its development are contributing to an already worsening carbon dioxide emissions crisis. The computational power required to train AI software is staggering.

According to Mosharaf Chowdhury, a computer scientist and Associate Professor at the University of Michigan, “…training the GPT-3 model just once consumes 1,287 megawatt hours, which is enough to supply an average U.S. household for 120 years.”
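Chowdhury's comparison can be checked the same way. The sketch below assumes an average U.S. household uses about 10,700 kilowatt-hours of electricity per year; that figure is our assumption, roughly in line with recent federal averages, and it shifts from year to year. The function name is illustrative only.

```python
# Rough check of the "120 household-years" comparison.
# Assumption (not from the quote): an average U.S. household uses
# about 10,700 kWh per year; the real average varies by year.
TRAINING_MWH = 1_287             # GPT-3 training energy quoted above
HOUSEHOLD_KWH_PER_YEAR = 10_700  # assumed average annual household use


def household_years(training_mwh: float, kwh_per_year: float) -> float:
    """Years a single household could run on the given training energy."""
    return training_mwh * 1000 / kwh_per_year  # MWh -> kWh, then divide


if __name__ == "__main__":
    years = household_years(TRAINING_MWH, HOUSEHOLD_KWH_PER_YEAR)
    print(f"{years:.0f} years")
```

With these inputs it prints 120 years. A household that uses more or less electricity than the assumed average would move the result by a few years in either direction.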

By another estimate, training a single large AI model can emit roughly 626,000 pounds of carbon dioxide equivalent, nearly five times the lifetime emissions of an average American car.

So, how can this be fixed, or at least reduced? Much of the heavy lifting must be done by the companies building AI, such as Meta, Google and Amazon, to ensure that cooling water is recycled and that carbon emissions are cut.

However, there is certainly personal accountability attached. AI can do many things, but it shouldn't be writing your grocery lists, essays or emails. You shouldn't be querying back and forth with ChatGPT like it's your personal robot. If everyone trims their AI usage, our planet will be cleaner for it.